Reward Bench



Here, you'll find the raw scores for the HERM project.

The repository is structured as follows.

├── best-of-n/                           <- Nested directory for different completions on Best of N challenge
|   ├── alpaca_eval/                     <- Results for each reward model
|   |   ├── tulu-13b/{org}/{model}.json
|   |   └── zephyr-7b/{org}/{model}.json
|   └── mt_bench/
|       ├── tulu-13b/{org}/{model}.json
|       └── zephyr-7b/{org}/{model}.json
├── eval-set-scores/{org}/{model}.json   <- Per-prompt scores on our core evaluation set.
├── eval-set/                            <- Aggregated results on our core eval. set.
├── pref-sets-scores/{org}/{model}.json  <- Per-prompt scores on existing test sets.
└── pref-sets/                           <- Aggregated results on existing test sets.
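As a rough illustration of the layout above, the sketch below walks a local copy of the repository and collects every `{org}/{model}.json` file under one of the per-prompt score directories. The helper name `load_per_model_json` is hypothetical, and nothing is assumed about the contents of each file beyond it being valid JSON; the project's own loading code on GitHub remains the reference.

```python
import json
from pathlib import Path


def load_per_model_json(root, subdir="eval-set-scores"):
    """Return a dict mapping "{org}/{model}" to the parsed JSON file.

    Hypothetical helper: `root` is a local copy of this repository and
    `subdir` is one of the directories laid out as {org}/{model}.json
    (for example "eval-set-scores" or "pref-sets-scores").
    """
    results = {}
    base = Path(root) / subdir
    # Exactly two levels below `subdir`: the org directory, then the model file.
    for path in sorted(base.glob("*/*.json")):
        org = path.parent.name
        model = path.stem  # file name without the trailing ".json"
        with path.open(encoding="utf-8") as f:
            # The JSON schema is not documented here, so it is returned as-is.
            results[f"{org}/{model}"] = json.load(f)
    return results
```

One way to obtain a local copy is `huggingface_hub.snapshot_download(repo_id="allenai/reward-bench-results", repo_type="dataset")`, which returns the path of the downloaded snapshot; that path can be passed as `root`. The `best-of-n/` files sit one level deeper, so a call such as `load_per_model_json(root, "best-of-n/alpaca_eval/tulu-13b")` should cover them under the same assumption.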

The data is loaded by the other projects in this repo and released for further research. See the GitHub repo or the leaderboard source code for examples of loading and manipulating the data.

Tools for analysis are found on GitHub.

Contact: nathanl at allenai dot org

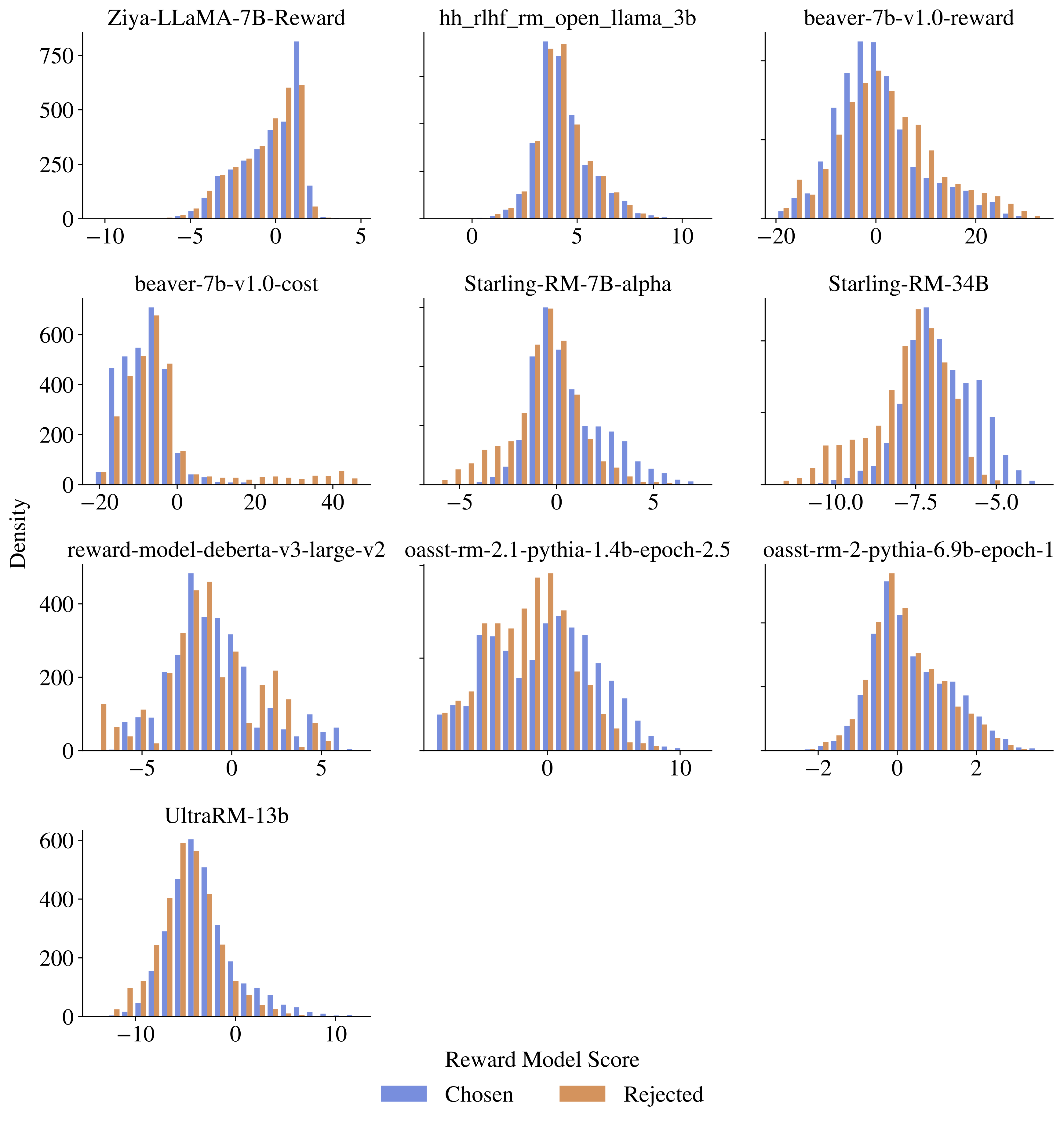

For example, this data can be used to aggregate the distribution of scores across models (it also powers our leaderboard)!